A Deep Dive Framework for Social Sector Professionals to Navigate AI Adoption

Introduction: Why the Social Sector Must Understand AI Personas

Artificial Intelligence (AI) is no longer a futuristic concept – it’s a tool of today. For social sector organizations, AI has the potential to transform program delivery, improve efficiency, enhance fundraising, deepen monitoring and evaluation, and ensure greater equity and inclusion.

But here’s the catch: not everyone in the social sector approaches AI the same way.

Some dive in with curiosity, others with caution. Some want efficiency, others focus on ethical implications. Understanding what kind of AI user you are can help you harness AI more effectively, avoid burnout, and choose tools that align with your mission.

In this blog, we introduce a thoughtful, research-backed framework to help social impact professionals identify their AI archetype and take the right next steps.

The Five AI Archetypes in the Social Sector

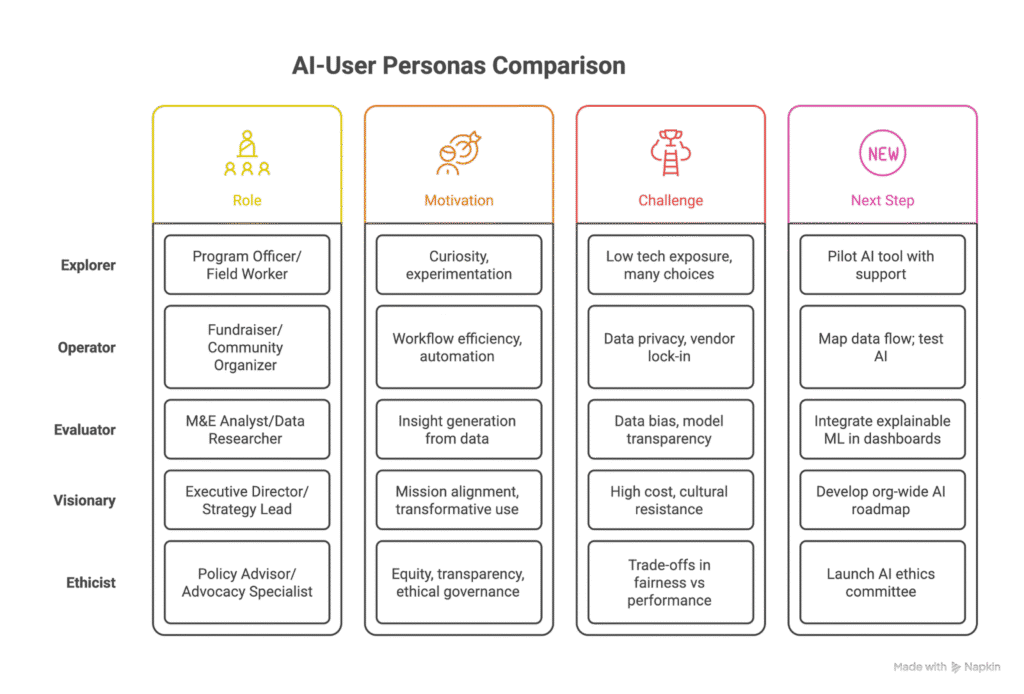

We’ve identified five core AI-user personas based on real-world roles, motivations, challenges, and behavioral patterns across nonprofits, foundations, and social enterprises:

| Archetype | Typical Role | Core Motivation | Key Challenge | Next Step |
|---|---|---|---|---|
| Explorer | Program Officer / Field Extension Team Worker / Community Organizer | Curiosity, experimentation | Low tech exposure, too many choices | Pilot a chatbot or AI tool with guided support |
| Operator | Fundraiser / Community Organizer / Events Team | Workflow efficiency, automation | Data privacy, vendor lock-in | Map data flow; test AI for donor segmentation |
| Evaluator | M&E Analyst / Data Researcher | Insight generation from program data | Data bias, model transparency | Integrate explainable ML into M&E dashboards |
| Visionary | Executive Director / Strategy Lead | Mission alignment, transformative use | High cost, cultural resistance | Develop an org-wide AI roadmap |
| Ethicist | Policy Advisor / Advocacy Specialist | Equity, transparency, ethical governance | Trade-offs between fairness and performance | Launch an interdisciplinary AI ethics committee |

1. Explorer: The Curious Pioneer

The Explorer embodies the spirit of experimentation amidst uncertainty. Often rooted in frontline roles—community facilitators, program officers, or outreach workers—this archetype views AI not as a grand transformation, but as a humble aid to deepen human-centered service delivery.

Their use of AI tends to begin with curiosity: piloting a WhatsApp-based chatbot for helpline queries, testing voice-to-text tools for field data collection, or using AI translation in linguistically fragmented geographies. The Explorer’s strength lies in proximity to the community, and their questions are far more grounded than grandiose: Can this tool help me respond more quickly? Can it make my reporting easier? Can it help me listen better?

Yet this archetype is at risk of digital fatigue. The AI landscape, cluttered with jargon and vendor noise, can overwhelm those without formal data training. Tools can fail when divorced from context. And success requires more than access—it needs anchoring in lived realities.

To advance responsibly, Explorers should:

- Engage with low-code/no-code tools that come with contextual training modules.

- Embed community voices in early feedback loops during AI pilots.

- Partner with academic institutions or volunteer tech networks for hands-on support.

- Measure success not by sophistication, but by relevance: Did it save time? Did it serve people better?

Explorers are often underestimated—but they are the true litmus test of AI’s promise in the social sector. Where they succeed, trust is built. Where they struggle, ethical AI will never scale.

2. Operator: The Efficiency Seeker

Operators reside at the heart of systems. Their daily rhythm pulses with tasks—donor outreach, event management, CRM updates, stakeholder segmentation. Their fascination with AI is utilitarian: a means to reduce redundancy, improve precision, and optimize campaign outcomes.

Yet their work has stakes. Fundraising AI tools that predict “donor likelihood” can also profile people unfairly. Outreach automations can cross ethical lines when personalization becomes manipulation. Operators navigate a fragile tension between efficiency and dignity.

They do not lack initiative—what they often need is structure. Many deploy AI-powered fundraising platforms or volunteer-matching algorithms without a clear audit trail of data flows. In a world governed by data protection laws and rising trust deficits, unexamined automation is no longer excusable.

To move with integrity and precision, Operators should:

- Conduct a data minimization and consent audit before onboarding any AI tool.

- Use interoperable systems to avoid vendor lock-in and future-proof their workflows.

- Pair each AI deployment with a human-in-the-loop checkpoint to preserve relational nuance.

- Shift from “growth hacks” to ethical metrics: not just how many donors opened the email, but how many felt respected.
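For readers who want to see what the data minimization and consent audit might look like in practice, here is a minimal Python sketch. It assumes a donor export in which each record carries a boolean `ai_consent` flag, plus a purpose-specific allowlist of fields the AI tool actually needs. The field names, the sample records, and the `audit_export` and `minimized_export` helpers are all hypothetical, not part of any CRM or fundraising platform.

```python
from dataclasses import dataclass

# Hypothetical allowlist: the only donor fields the AI tool needs for its stated purpose.
ALLOWED_FIELDS = {"donor_id", "last_gift_date", "gift_total", "preferred_channel"}


@dataclass
class AuditResult:
    fields_to_drop: list[str]           # present in the export but not on the allowlist
    records_without_consent: list[str]  # donor IDs with no recorded AI-processing consent


def audit_export(records, allowed_fields):
    """Flag fields outside the allowlist and records lacking AI-processing consent."""
    present = set()
    no_consent = []
    for record in records:
        present.update(record.keys())
        # Assumed convention: each record carries a boolean 'ai_consent' flag.
        if not record.get("ai_consent", False):
            no_consent.append(record["donor_id"])
    # The consent flag is audit metadata, so it is not reported as a field to drop.
    extra = sorted(present - allowed_fields - {"ai_consent"})
    return AuditResult(fields_to_drop=extra, records_without_consent=no_consent)


def minimized_export(records, allowed_fields):
    """Keep only consented records, stripped down to the allowlisted fields."""
    return [
        {key: value for key, value in record.items() if key in allowed_fields}
        for record in records
        if record.get("ai_consent", False)
    ]


# Illustrative records (hypothetical data).
donors = [
    {"donor_id": "D1", "gift_total": 250, "home_address": "redacted", "ai_consent": True},
    {"donor_id": "D2", "gift_total": 40, "religion": "redacted", "ai_consent": False},
]
result = audit_export(donors, ALLOWED_FIELDS)
print("Drop before sharing:", result.fields_to_drop)
print("No consent recorded:", result.records_without_consent)
print("Safe to share:", minimized_export(donors, ALLOWED_FIELDS))
```

The sketch uses an allowlist (what the tool needs) rather than a blocklist (what it must not see), so a sensitive field added to the CRM later is excluded by default instead of leaking silently to a vendor.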

Operators may not code, but their choices determine how AI touches lives. And when they act with informed caution, their organizations scale not just faster, but fairer.

3. Evaluator: The Insight Generator

The Evaluator is a cartographer of complexity. Entrusted with extracting meaning from sprawling datasets—across education outcomes, health indicators, social behavior—they turn to AI not for automation, but for amplified intelligence. Predictive models, clustering algorithms, and real-time dashboards are tools in service of clarity.

Yet clarity in social data is elusive. Datasets are often small, sparse, or biased. Algorithms trained on non-representative populations risk reinforcing inequity. Evaluators carry a burden of epistemic responsibility: to ensure that insight doesn’t become illusion.

What sets them apart is discipline. They ask uncomfortable questions of models: Why did it predict this dropout? What variables did it weight? Could it explain itself to a policymaker—or to the parent of the child it flagged?

To uphold rigor, Evaluators should:

- Prefer open-source models with transparent architectures, and pair them with explainability libraries (e.g., SHAP, LIME).

- Validate models through triangulated methods—combining AI predictions with qualitative field insights.

- Co-create interpretation frameworks with program teams, making analytics more actionable and less alien.

- Archive every assumption. Build an audit trail. Design for reproducibility.
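The Evaluator’s questions (Why did it predict this dropout? What variables did it weight?) become concrete with a transparent model. The sketch below does not use SHAP or LIME. It shows the simplest case, a logistic-regression risk score, where each variable’s push on a single prediction can be read off directly as its weight times the distance from the cohort average. The weights, intercept, cohort averages, and feature names are all hypothetical.

```python
import math

# Hypothetical model: weights and intercept of a logistic-regression dropout-risk score.
# In practice these would come from a model fitted and validated on program data.
WEIGHTS = {"attendance_rate": -3.0, "distance_km": 0.15, "sibling_dropped_out": 1.2}
INTERCEPT = 0.5
# Baseline: average feature values across the cohort (also hypothetical).
BASELINE = {"attendance_rate": 0.85, "distance_km": 4.0, "sibling_dropped_out": 0.2}


def predict_risk(features):
    """Probability of dropout from the logistic score."""
    z = INTERCEPT + sum(WEIGHTS[f] * features[f] for f in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def explain(features):
    """Each feature's push on the log-odds relative to the cohort average,
    sorted by magnitude so the strongest drivers come first."""
    contributions = {f: WEIGHTS[f] * (features[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


student = {"attendance_rate": 0.55, "distance_km": 9.0, "sibling_dropped_out": 1}
print(f"risk = {predict_risk(student):.2f}")
for feature, contribution in explain(student):
    print(f"{feature:>20}: {contribution:+.2f} log-odds")
```

Because the score is linear in the log-odds, the contributions add up exactly to the gap between this student’s score and that of a student with cohort-average values. That is the kind of breakdown one can walk a policymaker or a parent through. For nonlinear models this additivity no longer comes for free, which is the gap libraries such as SHAP are designed to fill.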

Evaluators are not just analysts; they are the conscience of AI in the social sector. In their hands, data becomes both mirror and compass.

4. Visionary: The Strategic Architect

The Visionary operates at the altitude of systems and futures. For them, AI is not a tool—it is a strategic force reshaping how organizations imagine their mission, structure their services, and engage the world. They are not content with dashboards; they are sketching new architectures of delivery, new models of impact.

Yet vision is fragile in the face of inertia. Cultural resistance, skill gaps, and uncertainty in returns can derail AI integration. Many Visionaries struggle to translate ambition into institutional buy-in, especially when the workforce fears replacement rather than augmentation.

Their task is to balance imagination with governance—to inspire, but also to scaffold.

To build enduring AI capacity, Visionaries should:

- Conduct a strategic AI readiness audit—spanning infrastructure, leadership mindset, and ethical preparedness.

- Lead cross-functional AI roadmapping with short-, mid-, and long-term milestones.

- Blend build-buy-partner strategies that ensure both innovation and sustainability.

- Frame AI not as a departure from mission, but as a deeper embodiment of it: more reach, better data, faster iteration.

Visionaries do not need to understand every algorithm. But they must master the art of catalytic alignment—between technology and values, between systems and souls.

5. Ethicist: The Equity Guardian

The Ethicist stands as the sector’s sentinel. Their radar is tuned to the unseen harms that AI can inflict—on marginalized communities, unconsenting users, or underrepresented data subjects. They are the ones asking: Who was left out of the dataset? Who benefits from this prediction? Whose values are encoded in this model?

Often operating at the intersection of legal, policy, advocacy, and community engagement, the Ethicist’s role is critical—but often misunderstood. They are not “blockers” of innovation. They are architects of justice within innovation.

Yet their work is fraught. Ethical frameworks for AI in the social sector remain emergent. Tools for bias auditing are nascent. And communities are rarely included in discussions about the tools that may define their access to aid, education, or housing.

To institutionalize equity, Ethicists should:

- Establish an AI ethics governance body that includes beneficiaries, not just technocrats.

- Develop context-sensitive ethical rubrics—e.g., community consent, algorithmic fairness, explainability thresholds.

- Push for open declarations of bias audits, model provenance, and limitations on AI claims in social programs.

- Collaborate across silos: work with Explorers and Operators, not just policy circles.
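One of the simplest bias audits an Ethicist can request is a comparison of selection rates across groups. The sketch below computes the share of each group selected by a screening model (for example, approved for aid) and that rate’s ratio to the highest group’s rate, flagging ratios below 0.8. The threshold borrows the “four-fifths rule” from US employment-selection guidelines and serves only as an illustrative default. The group labels and decisions are hypothetical, and the `selection_rates` and `disparate_impact` helpers are illustrative names, not a library API.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of each group that was selected; decisions are (group, selected) pairs."""
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {group: chosen[group] / totals[group] for group in totals}


def disparate_impact(decisions, threshold=0.8):
    """Ratio of each group's selection rate to the highest group's rate.

    Groups whose ratio falls below `threshold` are returned for human review.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no gap in selection rates to report.
        return {group: 1.0 for group in rates}, []
    ratios = {group: rate / best for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Hypothetical outcomes from an aid-eligibility screening model.
decisions = (
    [("urban", True)] * 40 + [("urban", False)] * 10
    + [("rural", True)] * 18 + [("rural", False)] * 22
)
ratios, flagged = disparate_impact(decisions)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f} of the highest selection rate")
print("Needs review:", flagged)
```

A flag like this is a prompt for investigation, not a verdict. A low ratio may reflect genuine differences in need, and a passing ratio does not rule out other harms, which is why selection-rate checks belong alongside community consent and explainability in an ethical rubric.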

The future of AI in the social sector hinges not just on what we build, but on how and for whom. The Ethicist ensures we never forget that.

How to Evolve as an AI-Ready Social Professional

Regardless of your archetype, you don’t have to stay static. Here’s how to evolve:

| Evolution Path | How to Expand Expertise |
|---|---|
| Explorer → Operator | Learn basic data governance and tool evaluation skills |
| Operator → Evaluator | Explore responsible data science and impact analysis |
| Evaluator → Visionary | Gain leadership buy-in and map AI’s alignment to mission |
| Visionary → Ethicist | Ground your roadmap in fairness, transparency, and inclusion |
| Ethicist → Visionary | Translate ethics into scalable governance models |


Takeaway: Your Archetype is Just the Beginning

AI in the social sector isn’t just about technology – it’s about values, people, and missions. Recognizing your archetype is a powerful first step, but your journey continues with learning, collaboration, and conscious adoption.

Whether you’re piloting your first chatbot, strategizing an AI-informed theory of change, or crafting a sector-wide ethical policy, know this: you belong in the AI conversation.

At NuSocia, we’ve developed robust frameworks to assess AI maturity across teams and organizations, and have successfully partnered with numerous stakeholders to elevate them from foundational to advanced levels of AI readiness. Get in touch to learn how we can support your journey.