We all know the feeling. You’re on a field visit, energized by the sight of a skilling program in action. The students are engaged, the trainers are passionate. But then you open the MIS dashboard, and the numbers… just don’t seem to match the reality on the ground.

Recently, while reviewing skilling program documents, we stumbled down a rabbit hole of mismatched numbers. It wasn’t malicious; it was just complicated. Continuous programs, overlapping financial years, and elusive phone signals create a perfect storm for data chaos.

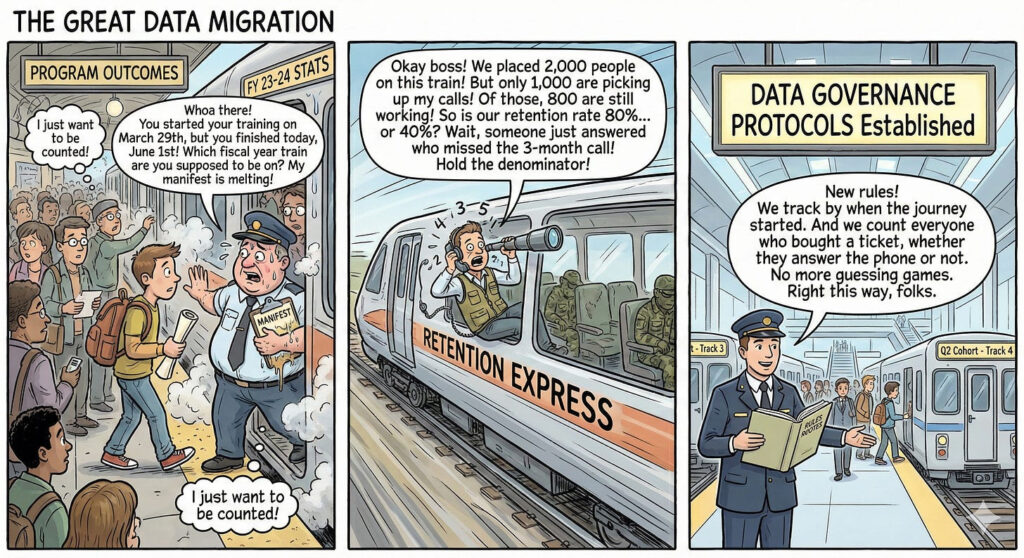

The comic above might seem exaggerated, but it mirrors the exact challenges our field teams face daily.

During our recent observations, we realized that continuous skilling programs don’t neatly fit into administrative boxes.

- If a trainee enrolls in March (one fiscal year) but graduates in June (the next), where do they belong?

- When calculating retention, if our denominator depends on “calls connected” rather than “total placed,” our success rate fluctuates wildly based on phone network availability, not actual program impact.
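A small worked example shows how the second problem plays out. The numbers are invented, and the sketch assumes that poor signal in the second round happens to hide more of the employed trainees (for instance, if they work where coverage is weak). The true retention rate is identical in both rounds; only who picked up the phone changes.

```python
# Hypothetical numbers for illustration only: 100 trainees placed, 60 of whom
# are in fact still employed in both follow-up rounds.
PLACED = 100
TRULY_RETAINED = 60

# Who picked up the phone in each round (assumed counts). Nothing about the
# program changed between rounds; only network coverage did.
rounds = {
    "round_1_good_signal": {"reached_retained": 50, "reached_not_retained": 20},
    "round_2_poor_signal": {"reached_retained": 20, "reached_not_retained": 20},
}


def rate_over_connected(reached_retained: int, reached_not_retained: int) -> float:
    """Retention computed only over calls that connected (the fragile definition)."""
    connected = reached_retained + reached_not_retained
    return reached_retained / connected


for name, r in rounds.items():
    rate = rate_over_connected(r["reached_retained"], r["reached_not_retained"])
    print(f"{name}: {rate:.1%} of connected calls retained "
          f"(true rate {TRULY_RETAINED / PLACED:.0%})")
```

Under these assumptions the “calls connected” figure drops from about 71% to 50% between rounds, even though the underlying retention never moved.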

If we don’t know how we are counting, we don’t truly know what we are achieving.

We cannot build outcome-oriented programs on shaky data foundations. It’s time to move from ad-hoc counting to robust data governance.

The Solution: Drawing the Lines

To ensure our data is as credible as our fieldwork, we are implementing stronger governance mechanisms. This isn’t about adding red tape; it’s about creating clarity so that the numbers tell the true story.

Moving forward, our approach will focus on three key pillars:

- Fixed Cohort Rules: We must stop chasing moving targets. We will define clear rules for tracking each group of trainees (a cohort) over time based on when they started, regardless of when they finish or when a fiscal year ends.

- The “Golden Definitions”: What exactly does “retention” mean? We need a single, agreed-upon denominator. For example, retention should likely be calculated against the total number of trainees placed, with non-respondents clearly categorized, rather than shrinking the pool to only those who answered the phone.

- Transparency is Key: Our reports must clearly distinguish between a “confirmed outcome” (we have proof), a “self-reported outcome” (they told us), and an “unknown status” (we couldn’t connect).
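The three pillars can be sketched together in a few lines of Python. This is a minimal illustration, not a prescribed system: the record layout (`Trainee`, `OutcomeStatus`), the April-to-March fiscal year, and the `FY2023-24` label format are all hypothetical choices for the example (the March/June example earlier fits an April start, but `start_month` should match your own calendar).

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class OutcomeStatus(Enum):
    CONFIRMED = "confirmed"          # we have proof
    SELF_REPORTED = "self_reported"  # they told us
    UNKNOWN = "unknown"              # we couldn't connect


@dataclass
class Trainee:
    # Hypothetical record layout; real MIS fields will differ.
    trainee_id: str
    enrolled_on: date
    placed: bool
    retained: Optional[bool]  # None when the status is UNKNOWN
    status: OutcomeStatus


def fiscal_year_start(d: date, start_month: int = 4) -> int:
    """Calendar year in which the fiscal year containing `d` begins.

    Assumes an April-to-March fiscal year by default (start_month=4).
    """
    return d.year if d.month >= start_month else d.year - 1


def cohort_label(enrolled_on: date) -> str:
    """Fixed cohort rule: a trainee belongs to the fiscal year in which they enrolled."""
    start = fiscal_year_start(enrolled_on)
    return f"FY{start}-{(start + 1) % 100:02d}"


def retention_report(trainees: list[Trainee]) -> dict[str, tuple[int, float]]:
    """Count placed trainees by outcome; every share uses total placed as the denominator."""
    placed = [t for t in trainees if t.placed]
    counts = Counter({"retained_confirmed": 0, "retained_self_reported": 0,
                      "not_retained": 0, "unknown": 0})
    for t in placed:
        if t.status is OutcomeStatus.UNKNOWN:
            counts["unknown"] += 1  # stays in the denominator, reported separately
        elif t.retained:
            counts[f"retained_{t.status.value}"] += 1
        else:
            counts["not_retained"] += 1
    total = len(placed)
    return {key: (n, n / total if total else 0.0) for key, n in counts.items()}


def by_cohort(trainees: list[Trainee]) -> dict[str, dict[str, tuple[int, float]]]:
    """Group trainees by enrollment cohort, then report each cohort separately."""
    cohorts = defaultdict(list)
    for t in trainees:
        cohorts[cohort_label(t.enrolled_on)].append(t)
    return {label: retention_report(members) for label, members in cohorts.items()}


# Usage with invented records: all four enrolled in March 2024, so they stay in
# FY2023-24 even if they graduate in June.
sample = [
    Trainee("A", date(2024, 3, 10), True, True, OutcomeStatus.CONFIRMED),
    Trainee("B", date(2024, 3, 20), True, True, OutcomeStatus.SELF_REPORTED),
    Trainee("C", date(2024, 3, 25), True, None, OutcomeStatus.UNKNOWN),
    Trainee("D", date(2024, 3, 28), True, False, OutcomeStatus.CONFIRMED),
]

for label, report in by_cohort(sample).items():
    print(label)
    for key, (n, share) in report.items():
        print(f"  {key}: {n} ({share:.0%})")
```

Keeping “unknown” inside the denominator means a month of poor signal shows up as a larger unknown share, instead of quietly changing the base that retention is computed on.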

By adopting these standards, we stop herding cats and start counting real impact. Let’s make sure the data we share is as powerful as the work you do.