Large Language Models (LLMs) have ushered in a new era in artificial intelligence. Their capabilities – ranging from understanding and generating human-like language to performing tasks like translation, summarization, content creation, and complex problem-solving – have transformed how industries operate and individuals interact with technology. Integrated into platforms spanning search engines, customer service systems, coding assistants, and enterprise tools, LLMs have rapidly become foundational to the digital economy.

Yet behind their seamless functionality lies a significant environmental footprint that is often overlooked. Training and deploying these models consumes vast amounts of energy, emits considerable carbon dioxide, and requires substantial water resources. As AI continues to scale globally, its environmental impact is becoming increasingly difficult to ignore.

Training vs. Inference: Two Phases, Different Demands

The lifecycle of an LLM consists of two primary stages – training and inference – each with its own resource demands.

Training is the process of teaching a model to understand language by exposing it to massive datasets – books, websites, scientific articles, and more. Modern LLMs such as recent GPT variants may contain hundreds of billions to over a trillion parameters, each a numerical weight that is adjusted during training. This phase involves high-performance computing infrastructure – thousands of GPUs or TPUs operating continuously over weeks or months – running sophisticated deep learning architectures such as Transformers.

The computational load during training is immense. Parallel processing methods such as data, tensor, and pipeline parallelism distribute the workload across many devices, but they do not reduce the total amount of computation, so training still demands significant electricity and data center overhead. As a result, training a single large-scale model can consume tens of thousands of megawatt-hours (MWh) of electricity, comparable to the annual energy use of thousands of average households.
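To make the household comparison concrete, here is a minimal back-of-the-envelope sketch. Every input is a hypothetical placeholder rather than a measurement of any real training run: the accelerator count, the 0.5 kW average draw per accelerator, the 90-day duration, the PUE of 1.2, and the round figure of 10 MWh per household per year are all assumptions chosen only to show the arithmetic.

```python
def training_energy_mwh(num_accelerators, avg_power_kw, hours, pue=1.0):
    """Estimate facility-level training energy in MWh.

    energy = accelerators x average power per accelerator x hours x PUE
    """
    it_energy_kwh = num_accelerators * avg_power_kw * hours
    return it_energy_kwh * pue / 1000.0  # kWh -> MWh


def household_years(energy_mwh, household_mwh_per_year):
    """Express an energy total as years of electricity for one household."""
    return energy_mwh / household_mwh_per_year


# Hypothetical run: 10,000 accelerators at 0.5 kW each for 90 days, PUE 1.2.
energy = training_energy_mwh(10_000, 0.5, 90 * 24, pue=1.2)
# Assumed round figure of 10 MWh per household per year.
homes = household_years(energy, household_mwh_per_year=10.0)
print(f"{energy:,.0f} MWh, about {homes:,.0f} household-years")
```

Under these assumptions the run comes to roughly 13,000 MWh, or on the order of a thousand household-years. Changing any one input scales the result linearly.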

Inference begins once a model is deployed. Every time a user interacts with an LLM – whether through chat, search, or embedded AI features – it processes new input to generate a relevant response. While a single inference task consumes a fraction of the energy required for training, the scale of usage is staggering. With millions of queries served daily, the collective energy consumption of inference operations over time may exceed the one-time training cost.

Energy: The AI Power Demand

The energy consumption involved in AI is not theoretical – it is measurable and substantial. Training early models consumed a few megawatt-hours, but today’s leading LLMs can require tens of thousands of megawatt-hours. For instance, the training of some large-scale models likely consumed energy equivalent to powering over 1,000 U.S. homes for a full year.

Hardware efficiency plays a pivotal role here. Specialized processors like Nvidia’s A100 or H100 GPUs and Google’s TPUs are optimized for deep learning tasks, but their deployment at scale still demands enormous power input. Architectural advances – such as sparsity in Mixture-of-Experts models – can lower consumption without compromising performance, but such designs are not yet universally adopted.

Inference is no slouch in energy terms either. Even though an individual query may use only 0.3 to 5 watt-hours depending on complexity, multiplying that by hundreds of millions of daily interactions yields global electricity consumption on the order of hundreds of megawatt-hours every day.
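The scaling from per-query energy to a daily total is simple multiplication, sketched below. The 0.3 and 5 watt-hour bounds come from the text. The figure of 200 million queries per day is an assumption standing in for "hundreds of millions".

```python
def daily_inference_mwh(wh_per_query, queries_per_day):
    """Total daily inference energy in MWh from a per-query figure in Wh."""
    return wh_per_query * queries_per_day / 1_000_000  # Wh -> MWh


QUERIES_PER_DAY = 200_000_000  # assumed volume, not a reported statistic

low = daily_inference_mwh(0.3, QUERIES_PER_DAY)   # light queries
high = daily_inference_mwh(5.0, QUERIES_PER_DAY)  # heavy queries
print(f"daily inference energy: {low:,.0f} to {high:,.0f} MWh")
```

With this assumed volume, the two bounds give about 60 and 1,000 MWh per day, a range that brackets the "hundreds of megawatt-hours" estimate.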

Carbon Emissions: AI’s Growing Shadow

Electricity consumption inevitably brings carbon emissions, especially when the grid mix includes fossil fuels. Training just one large model can emit hundreds to thousands of metric tons of carbon dioxide equivalent (CO2e), rivaling the lifetime emissions of dozens of cars or multiple transcontinental flights.

Carbon impact is highly dependent on location. Training the same model in a region powered predominantly by fossil fuels results in far greater emissions than in areas where renewable or low-carbon energy dominates. A model trained in France, with its largely nuclear-powered grid, produces far fewer emissions than the same model trained on a more fossil-dependent grid, as in parts of the U.S. or Asia. Thus, data center siting – choosing where to train and operate models – can dramatically alter environmental outcomes.
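The location effect follows from one relationship: operational emissions equal energy consumed multiplied by the carbon intensity of the grid. The sketch below applies it to the same hypothetical 10,000 MWh training run on three grids. The grid labels and intensity values are illustrative placeholders, not measured figures for any country.

```python
def emissions_tco2e(energy_mwh, grid_kg_co2e_per_kwh):
    """Operational emissions in metric tons of CO2e.

    MWh x 1000 kWh/MWh x kg/kWh / 1000 kg/t simplifies to MWh x kg/kWh.
    """
    return energy_mwh * grid_kg_co2e_per_kwh


TRAINING_MWH = 10_000  # hypothetical training run

# Illustrative grid intensities in kg CO2e per kWh (assumed values).
GRIDS = {
    "low_carbon_grid": 0.05,
    "mixed_grid": 0.40,
    "coal_heavy_grid": 0.70,
}

for name, intensity in GRIDS.items():
    tons = emissions_tco2e(TRAINING_MWH, intensity)
    print(f"{name}: {tons:,.0f} t CO2e")
```

With these placeholder values, the same job differs by more than a factor of ten in emissions depending only on where it runs.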

Furthermore, carbon emissions are not limited to operational phases. The production of AI hardware – GPUs, servers, and supporting infrastructure – also has an embodied carbon cost. While harder to quantify, this contribution is significant and often left out of mainstream assessments.

Water: AI’s Hidden Thirst

A less discussed, but equally critical, environmental cost is water usage. AI systems require cooling – lots of it. Many data centers use evaporative cooling systems, in which clean freshwater is evaporated to remove heat from servers. This is known as direct, or Scope 1, water usage.

Training a single LLM can evaporate hundreds of thousands of liters of potable water, with the total operational water footprint (including indirect water used to generate electricity) reaching millions of liters. The cumulative figures are even larger for inference. Though an individual query might use just over a milliliter of water, hundreds of millions of daily queries add up to hundreds of thousands to millions of liters consumed globally every day.

Water use varies with climate, cooling technology, and grid energy sources. For example, regions relying heavily on thermal power plants (coal, nuclear, gas) carry a large indirect water cost, because those plants consume water to cool and condense steam. In warm regions, evaporative cooling becomes more water-intensive, exacerbating strain in already water-scarce areas.
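One common way to reason about the direct and indirect components is to tie each to energy use: on-site water scales with server (IT) energy through a water usage effectiveness factor, and off-site water scales with total facility energy through the water intensity of electricity generation. The sketch below follows that split. The parameter names and all numeric values are assumptions for illustration, not data for any specific facility or grid.

```python
def water_footprint_liters(it_energy_kwh, onsite_wue_l_per_kwh,
                           pue, offsite_ewif_l_per_kwh):
    """Return (on-site, off-site) operational water use in liters.

    on-site  = IT energy x on-site water usage effectiveness (L/kWh)
    off-site = IT energy x PUE x electricity water intensity (L/kWh)
    """
    onsite = it_energy_kwh * onsite_wue_l_per_kwh
    offsite = it_energy_kwh * pue * offsite_ewif_l_per_kwh
    return onsite, offsite


# Hypothetical run: 1,000 MWh of IT energy, with assumed factors.
onsite, offsite = water_footprint_liters(
    it_energy_kwh=1_000_000,
    onsite_wue_l_per_kwh=0.5,    # assumed cooling water per kWh
    pue=1.2,                     # assumed facility overhead
    offsite_ewif_l_per_kwh=3.0,  # assumed water per kWh generated
)
print(f"on-site: {onsite:,.0f} L, off-site: {offsite:,.0f} L")
```

With these placeholder factors, the on-site share is hundreds of thousands of liters and the off-site share runs into the millions, which is why the grid mix matters as much as the cooling system.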

The Long Tail of Inference: Cumulative Impact

While training is a resource-intensive event that happens periodically, inference is a never-ending operation. Every time someone asks ChatGPT a question, or an AI-powered tool auto-completes a sentence or analyzes a document, the model performs computations that consume energy and, by extension, emit carbon and use water.

Though each of these interactions may be small in isolation, their sheer volume makes them a critical component of AI’s environmental footprint. In fact, over the operational lifetime of an LLM, inference can account for the majority of its total energy and water consumption.
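A rough way to see when inference overtakes training is to compute the break-even point: the number of days of serving after which cumulative inference energy equals the one-time training energy. The inputs below are hypothetical and chosen only to match the orders of magnitude discussed in this article.

```python
def breakeven_days(training_mwh, inference_mwh_per_day):
    """Days of serving until cumulative inference energy equals training energy."""
    if inference_mwh_per_day <= 0:
        raise ValueError("daily inference energy must be positive")
    return training_mwh / inference_mwh_per_day


def inference_share(training_mwh, inference_mwh_per_day, days_in_service):
    """Fraction of lifetime energy (training + inference) spent on inference."""
    inference_total = inference_mwh_per_day * days_in_service
    return inference_total / (training_mwh + inference_total)


# Hypothetical inputs, not measurements of any deployed model.
TRAINING_MWH = 10_000
INFERENCE_MWH_PER_DAY = 100

days = breakeven_days(TRAINING_MWH, INFERENCE_MWH_PER_DAY)
share = inference_share(TRAINING_MWH, INFERENCE_MWH_PER_DAY, days_in_service=730)
print(f"break-even after {days:.0f} days; inference share after 2 years: {share:.0%}")
```

Under these assumptions inference matches the training cost after about 100 days, and after two years it accounts for close to nine-tenths of lifetime energy.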

Estimates suggest that ChatGPT alone could consume hundreds of megawatt-hours of electricity and millions of liters of water each day. This transforms what appears to be an efficient, instantaneous digital exchange into a significant contributor to planetary resource consumption.

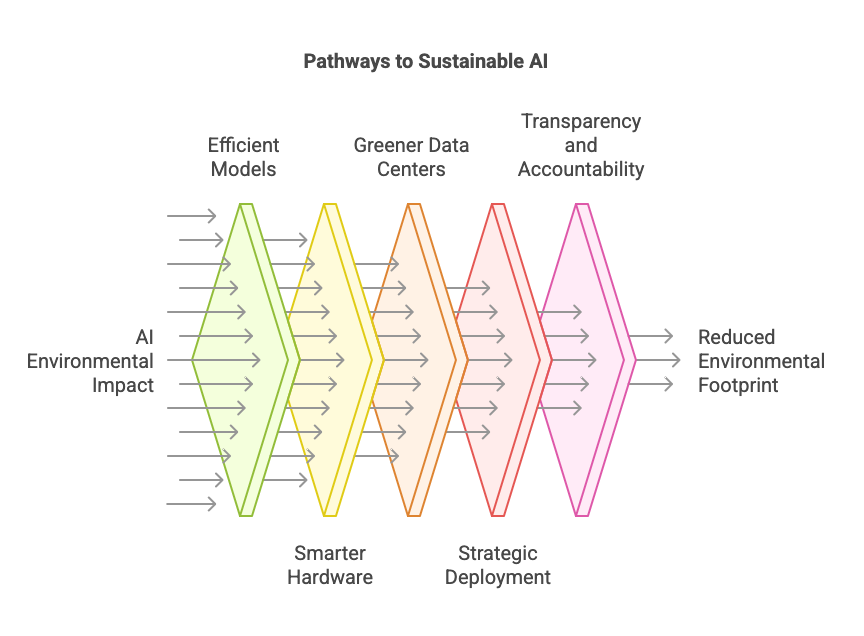

Mitigation Strategies: Pathways to Sustainable AI

Faced with the growing environmental costs of AI, the industry is responding with a range of mitigation strategies. These span from the design of smarter models to the greening of data centers and hardware.

1. Efficient Models and Architectures

- Techniques like pruning, quantization, and knowledge distillation reduce the size and power requirements of models without heavily compromising performance.

- Sparse models, such as Mixture-of-Experts architectures, activate only a subset of their parameters for each input, lowering computation and energy use.

- Smaller, task-specific models are gaining favor over general-purpose giants for many use cases.
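As an illustration of one technique from the list above, the sketch below shows symmetric 8-bit quantization of a handful of weights in plain Python. Storing each weight as an 8-bit integer instead of a 32-bit float cuts memory by a factor of four, at the cost of a small rounding error. This is a toy per-tensor scheme with made-up weights, not the method used by any particular framework.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.023, -0.5, 0.314, 1.27, -1.0]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

The rounding error per weight is bounded by half the scale, so tensors with a narrow value range lose very little precision.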

2. Smarter Hardware

- Purpose-built AI accelerators like newer-generation GPUs and TPUs offer greater performance per watt, improving energy efficiency.

- Innovations in chip and system architecture reduce overhead and improve thermal performance.

3. Greener Data Centers

- The use of renewable energy to power data centers significantly cuts carbon emissions.

- Leading providers are transitioning toward carbon-free energy portfolios and are lowering their Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, to cut cooling and power-distribution overhead.

- Water-saving technologies, such as closed-loop cooling and non-potable water reuse, are reducing the water footprint.
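PUE is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all. The short sketch below computes PUE and the overhead saved by improving it. The energy figures are hypothetical.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh, pue_value):
    """Energy spent on cooling, power conversion, and other non-IT loads."""
    return it_equipment_kwh * (pue_value - 1.0)


# Hypothetical facility: 1,000 kWh of IT load drawing 1,500 kWh in total.
current = pue(1_500, 1_000)
# Overhead avoided if the same IT load ran at an assumed PUE of 1.1 instead.
saved = overhead_kwh(1_000, current) - overhead_kwh(1_000, 1.1)
print(f"PUE {current:.2f}; improving to 1.10 saves about {saved:.0f} kWh")
```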

4. Strategic Deployment and Lifecycle Planning

- Workload scheduling – running energy-intensive jobs when renewable energy availability is high or temperatures are cooler – can reduce resource demand.

- Lifecycle assessments, which include embodied carbon and water in manufacturing, are helping identify full environmental costs and areas for optimization.
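The workload scheduling idea above can be sketched as a search over a forecast of grid carbon intensity: given hourly values and a job length, pick the contiguous window with the lowest average. The forecast numbers here are invented for illustration, and a real scheduler would also account for deadlines, capacity, and forecast uncertainty.

```python
def best_start_hour(forecast, job_hours):
    """Return (start index, average intensity) of the cleanest contiguous window.

    Uses a sliding-window sum so each hour of the forecast is visited once.
    """
    if job_hours <= 0 or job_hours > len(forecast):
        raise ValueError("job_hours must be between 1 and len(forecast)")
    window = sum(forecast[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        # Slide the window: add the hour entering, drop the hour leaving.
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start, best_sum / job_hours


# Hypothetical hourly forecast in g CO2e per kWh.
forecast = [450, 430, 400, 300, 220, 180, 200, 310, 420, 480]
start, avg = best_start_hour(forecast, job_hours=3)
print(f"start at hour {start}, average intensity {avg:.0f} g CO2e/kWh")
```

For this made-up forecast, the cleanest three-hour window begins at hour 4 and averages 200 g CO2e/kWh, less than half the intensity of starting immediately.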

5. Transparency and Accountability

- There is a growing call for companies to publish environmental impact data alongside model performance benchmarks.

- Tools and standards are emerging to provide lifecycle carbon and water assessments, enabling better decision-making and accountability.

Conclusion: Redefining Progress with Sustainability

The age of artificial intelligence is here – but it comes with trade-offs. As LLMs become more powerful and ubiquitous, they also become more resource-intensive. The true cost of intelligence is no longer just in training time or cloud infrastructure bills – it is in electricity grids, cooling towers, and the carbon and water cycles of our planet.

But the trajectory is not set in stone. Through a combination of algorithmic innovation, efficient hardware, clean energy transitions, and responsible policy, it is possible to build a future where AI evolves sustainably.

For AI to remain a force for good, it must be developed and deployed with environmental responsibility as a core principle – not an afterthought. Only then can we unlock the full potential of artificial intelligence without compromising the health of our planet.

– Aviral Apurva